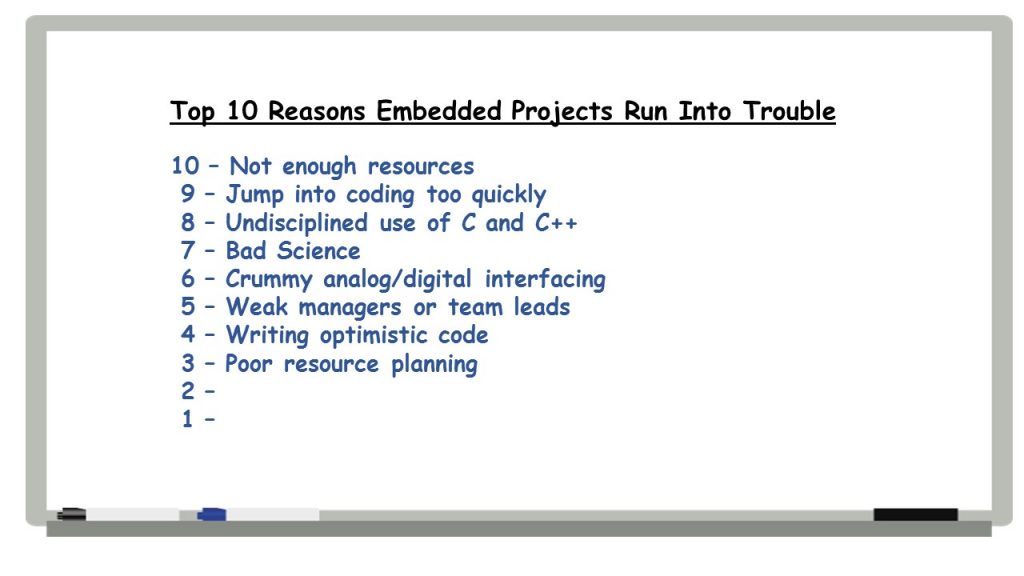

For the last month or so, Embedded.com has been running an excellent series on the Top Ten reasons embedded projects run into trouble, according to Jack Ganssle, an embedded systems guru. I’ve been having a good time counting down with Jack. As I’m writing this summary of his third and fourth reasons, reasons 1 and 2 have not yet been revealed. But stay tuned. I’ll be getting to them in another two weeks.

Anyway, here go reasons 4 and 3.

4 – Writing optimistic code

Jack starts off this piece in a very humorous way: with a store receipt for a couple of small sailboat parts that showed a charge of $84 trillion.

This is an example of optimistic programming: assuming everything will go well and that there's no chance of an error.

I don't know if the appearance of an obvious error like this is an example of optimistic programming, but it's sure an example of poor testing. Then again, maybe they're one and the same thing. Anyway, Jack points out that, given that "the resources in a $0.50 MCU dwarf those of the $10m Univac of yore… We can afford to write code that pessimistically checks for impossible conditions." And we need to do exactly that, given that "when it's impossible for some condition to occur, it probably will." Let's call this point Ganssle's Law: the coding equivalent of Murphy's Law.
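To make "pessimistically checks for impossible conditions" concrete, here's a minimal C sketch in the spirit of that $84 trillion receipt. The names (`receipt_total`, `line_item`, `total_status`) and the $100,000 plausibility ceiling are my own hypothetical choices, not anything from Jack's article. The idea is simply that a total which "can't" be absurd gets checked anyway, including for integer overflow.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical sanity ceiling: assume no single sale should exceed $100,000.
 * Pick whatever limit actually fits your product. */
#define MAX_PLAUSIBLE_TOTAL_CENTS (100000LL * 100LL)

typedef enum {
    TOTAL_OK,
    TOTAL_BAD_ITEM,     /* negative price or non-positive quantity */
    TOTAL_IMPLAUSIBLE   /* total would exceed the sanity ceiling */
} total_status;

typedef struct {
    int64_t price_cents;
    int32_t quantity;
} line_item;

/* Sums a receipt, refusing to trust inputs that "can't" be wrong.
 * *out_cents is written only when the result is TOTAL_OK. */
total_status receipt_total(const line_item *items, size_t count, int64_t *out_cents)
{
    int64_t total = 0;

    for (size_t i = 0; i < count; i++) {
        if (items[i].price_cents < 0 || items[i].quantity <= 0) {
            return TOTAL_BAD_ITEM;
        }
        /* Compare by division first so price * quantity can never overflow. */
        if (items[i].price_cents > MAX_PLAUSIBLE_TOTAL_CENTS / items[i].quantity) {
            return TOTAL_IMPLAUSIBLE;
        }
        int64_t line = items[i].price_cents * items[i].quantity;

        /* total <= ceiling here, so this subtraction can't go negative. */
        if (line > MAX_PLAUSIBLE_TOTAL_CENTS - total) {
            return TOTAL_IMPLAUSIBLE;
        }
        total += line;
    }

    *out_cents = total;
    return TOTAL_OK;
}
```

A receipt of two $12.99 items plus one $4.50 item comes back as `TOTAL_OK` with 3048 cents, while a single $84 trillion line item is rejected as `TOTAL_IMPLAUSIBLE` instead of being printed.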

Giving advice specific to embedded programming, he suggests that almost every switch statement should have a default case, and that assert macros should be used universally:

Done right, the assertion will fire close to the source of the bug, making it easy to figure out the problem. Admittedly, assert does stupid things for an embedded system, but it’s trivial to rewrite it to be more sensible.
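Here's a rough sketch of both suggestions together: a switch with a default case that catches an "impossible" value, and a hypothetical `FW_ASSERT` macro standing in for the standard `assert`, which on a hosted system typically writes to stderr and calls `abort()`, neither of which means much on a board with no console. What the failure handler should do on real hardware (log, put outputs in a safe state, reset) is an assumption that will vary by product; in this sketch it only records where it fired so the caller's fail-safe path can run. The valve states are likewise made up for illustration.

```c
#include <stdint.h>

/* Hypothetical embedded-friendly assert: remember where it fired
 * instead of printing and aborting. */
static const char *last_assert_file = 0;
static int last_assert_line = 0;
static unsigned assert_count = 0;

static void fw_assert_failed(const char *file, int line)
{
    last_assert_file = file;
    last_assert_line = line;
    assert_count++;
    /* On a real target this might disable outputs, save file/line to
     * non-volatile memory, and force a watchdog reset. Here it returns
     * so the caller's fallback code runs. */
}

#define FW_ASSERT(cond) \
    do { if (!(cond)) fw_assert_failed(__FILE__, __LINE__); } while (0)

typedef enum { VALVE_CLOSED, VALVE_OPENING, VALVE_OPEN } valve_state;

/* Returns the motor drive command for a valve state (1 = drive, 0 = off). */
int valve_drive(valve_state s)
{
    switch (s) {
    case VALVE_CLOSED:
        return 0;
    case VALVE_OPENING:
        return 1;
    case VALVE_OPEN:
        return 0;
    default:
        /* "Impossible": s was corrupted, or someone added a state
         * without handling it here. Flag it close to the source... */
        FW_ASSERT(0);
        return 0; /* ...and fail safe: motor off. */
    }
}
```

Because the macro records `__FILE__` and `__LINE__`, a corrupted state value points straight at the switch that caught it, which is the "fire close to the source of the bug" property Jack describes.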

Jack’s bottom line:

The best engineers are utter pessimists. They always do worst-case design.

Considering myself a pretty good engineer and a not especially pessimistic sort of guy, I hadn’t thought of it this way. But I’m definitely down with doing worst-case design.

3 – Poor resource planning

Embedded systems developers face a challenge that software developers, living in a world of cheap memory, cheap storage, etc., don't have to deal with: resource constraints.

With firmware, your resource estimates have got to be reasonably accurate from nearly day one of the project. At a very early point we’re choosing a CPU, clock rate, memory sizes and peripherals. Those get codified into circuit boards long before the code has been written. Get them wrong and expensive changes ensue.

But it’s worse than that. Pick an 8-bitter, but then max out its capabilities, what then? Changing to a processor with perhaps a very different architecture and you could be adding months of work. That initial goal of $10 for the cost of goods might swell a lot, perhaps even enough to make the product unmarketable.

This ties back to an earlier reason on Jack's list, his Number 9: jumping into coding too quickly. The answer to that one was to pay careful attention to requirements. The same thing applies here, with the added problem that you're typically subject to financial constraints on what hardware you can pick, plus the need to avoid built-in obsolescence. So:

A rule of thumb suggests, when budgets allow, doubling initial resource needs. Extra memory. Plenty of CPU cycles. Have some extra I/O at least to ease debugging.
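One way to keep that rule of thumb honest is to write the estimates down and let the compiler check them. This is a sketch under assumptions: every number below is a made-up placeholder for an early-project estimate and a candidate MCU, `fits_with_headroom` is a hypothetical helper, and the compile-time checks need a C11 compiler for `_Static_assert`.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical early-project estimates. */
#define EST_RAM_BYTES     (6u * 1024u)    /* RAM the firmware is expected to need */
#define EST_FLASH_BYTES   (40u * 1024u)   /* code plus constant data */
#define EST_CPU_LOAD_PCT  35u             /* worst-case CPU load, percent */

/* Hypothetical candidate part. */
#define PART_RAM_BYTES    (16u * 1024u)
#define PART_FLASH_BYTES  (128u * 1024u)

/* The rule of thumb: budget roughly twice the initial estimate. */
#define HEADROOM_FACTOR   2u

/* Runtime version, handy for checking figures from a map file or profiler. */
static bool fits_with_headroom(uint32_t estimate, uint32_t available)
{
    /* Widen before multiplying so large estimates can't wrap around. */
    return (uint64_t)estimate * HEADROOM_FACTOR <= available;
}

/* Compile-time version: the build fails if the part lacks 2x headroom. */
_Static_assert(EST_RAM_BYTES * HEADROOM_FACTOR <= PART_RAM_BYTES,
               "RAM: less than 2x headroom");
_Static_assert(EST_FLASH_BYTES * HEADROOM_FACTOR <= PART_FLASH_BYTES,
               "Flash: less than 2x headroom");
_Static_assert(EST_CPU_LOAD_PCT * HEADROOM_FACTOR <= 100u,
               "CPU: less than 2x headroom");
```

If a revised estimate grows past half of what the chosen part offers, the build breaks early, while changing the hardware is still relatively cheap, rather than after the circuit boards are built.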

Next post, we’ll wrap up with the reasons that get Jack’s nod for first and second place.

Here are the links to my prior posts summarizing Jack's Reasons 10 and 9, Reasons 8 and 7, and Reasons 6 and 5.