Whatever your feelings are about AI – It’s all great! It’s going to kill us all! You gotta take the bad with the good! It all depends! I’m not quite sure – yet! – most of us recognize that while AI is growing as a force, and will revolutionize entire industries, it does consume an awful lot of energy, leading to concerns about whether it is environmentally sustainable.

Consider these observations, based on the work of the International Energy Agency (IEA):

One of the areas with the fastest-growing demand for energy is the form of machine learning called generative AI, which requires a lot of energy for training and a lot of energy for producing answers to queries. Training a large language model like OpenAI’s GPT-3, for example, uses nearly 1,300 megawatt-hours (MWh) of electricity, the annual consumption of about 130 US homes. According to the IEA, a single Google search takes 0.3 watt-hours of electricity, while a ChatGPT request takes 2.9 watt-hours. (An incandescent light bulb draws an average of 60 watt-hours of juice.) If ChatGPT were integrated into the 9 billion searches done each day, the IEA says, the electricity demand would increase by 10 terawatt-hours a year — the amount consumed by about 1.5 million European Union residents. (Source: Vox)
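
The quoted figures can be sanity-checked with a little arithmetic. The sketch below is only a rough check, not the IEA's own method: it assumes every one of the 9 billion daily searches costs the extra 2.6 watt-hours, every day of the year. The function names are made up for illustration. Under those assumptions the added demand comes out at about 8.5 TWh a year, the same order of magnitude as the 10 TWh cited, and the GPT-3 comparison implies roughly 10 MWh per US home per year.

```python
# Back-of-the-envelope check of the figures quoted above.
GOOGLE_SEARCH_WH = 0.3     # watt-hours per conventional search (IEA, as quoted)
CHATGPT_REQUEST_WH = 2.9   # watt-hours per ChatGPT request (IEA, as quoted)
SEARCHES_PER_DAY = 9e9     # daily searches (as quoted)
WH_PER_TWH = 1e12          # watt-hours in one terawatt-hour


def extra_twh_per_year(searches_per_day=SEARCHES_PER_DAY):
    """Added annual demand if every search cost a ChatGPT request instead."""
    extra_wh_per_search = CHATGPT_REQUEST_WH - GOOGLE_SEARCH_WH
    return searches_per_day * extra_wh_per_search * 365 / WH_PER_TWH


def mwh_per_home(training_mwh=1300, homes=130):
    """Annual household consumption implied by the GPT-3 comparison."""
    return training_mwh / homes


if __name__ == "__main__":
    print(f"Extra demand: {extra_twh_per_year():.1f} TWh/year")
    print(f"Implied use per US home: {mwh_per_home():.0f} MWh/year")
```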

Given stats like these, and the fact that demand for AI – and the power it consumes along the way – is rapidly growing, it’s no wonder that engineers and researchers are focusing on ways to tamp down AI’s energy demands.

Writing recently in the EE Times, Simran Khoka notes that “data centers, central to AI computations, currently consume about 1% of global electricity—a figure that could rise to 3% to 8% in the next few decades if present trends persist,” adding that there are other environmental impacts that AI brings with it, such as e-waste and the water usage required for data center cooling. She notes that IBM is one of the leaders in creating analog chips for AI apps. These chips deploy phase-change memory (PCM) technology.

PCM technology alters the material phase between crystalline and amorphous states, enabling high-density storage and swift access times—qualities essential for efficient AI data processing. In IBM’s design, PCM is employed to emulate synaptic weights in artificial neural networks, thus facilitating energy-efficient learning and inference processes.

IBM is not alone. Khoka cites a couple of the little guys: Mythic, which:

…has engineered analog AI processors that amalgamate memory and computation. This integration allows AI tasks to be executed directly within memory, minimizing data movement and enhancing energy efficiency.

She also writes about Rain Neuromorphic, which is developing chips that “process signals continuously and perform neuronal computations, making them ideal for creating scalable and adaptable AI systems that learn and respond in real time.”

Applications well suited to analog chips include edge computing, neuromorphic computing, and AI inference and training.

A principal challenge that switching to analog chips presents is ensuring that they have the same precision and accuracy that digital chips yield. Another hurdle is that, at present, the infrastructure behind AI systems is digital.
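
To get a feel for the precision problem, here is a toy sketch. It assumes, purely for illustration, that each weight stored in an analog memory cell is read back with a small Gaussian error. That is not a model of PCM or of any vendor's chip, and the function names and noise levels are invented for the example. It compares an exact "digital" dot product with a noisy "analog" one.

```python
import random


def digital_dot(weights, inputs):
    """Exact multiply-accumulate, as a digital chip would compute it."""
    return sum(w * x for w, x in zip(weights, inputs))


def analog_dot(weights, inputs, noise_std, rng):
    """Same multiply-accumulate, but each stored weight is read back with
    Gaussian noise (a hypothetical stand-in for analog imprecision)."""
    return sum((w + rng.gauss(0.0, noise_std)) * x
               for w, x in zip(weights, inputs))


rng = random.Random(42)  # fixed seed so runs are repeatable
weights = [rng.uniform(-1.0, 1.0) for _ in range(256)]
inputs = [rng.uniform(0.0, 1.0) for _ in range(256)]
exact = digital_dot(weights, inputs)

for noise_std in (0.0, 0.01, 0.05):
    approx = analog_dot(weights, inputs, noise_std, rng)
    print(f"noise_std={noise_std}: absolute error = {abs(approx - exact):.4f}")
```

With zero noise the two results match exactly. As the assumed noise grows, so does the error, which is the gap analog designs have to keep small enough not to hurt model accuracy.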

It’s no surprise that MIT is keeping its eye on ways to reduce the energy consumption of voracious AI models. MIT’s Lincoln Lab Supercomputing Center (LLSC) is finding that by capping power and slightly increasing task time, energy consumption of GPUs can be substantially reduced. The trade-off: tasks may take 3 percent longer, but energy consumption is lowered by 12-15 percent. With power-capping constraints in place, the Lincoln Lab supercomputers are also running a lot cooler, decreasing demand placed on cooling systems – and keeping hardware in service longer. (And something as simple as running jobs at night, when it’s cooler, or in the winter, can greatly reduce cooling needs.)
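
Since energy is average power multiplied by time, those two percentages also tell us roughly how much lower the average GPU power draw must be under the cap. The sketch below works that out. It assumes the 3 percent and 12-15 percent figures apply to the same job, and the function name is made up for illustration.

```python
def implied_power_ratio(energy_saving, time_increase):
    """Average power under the cap relative to uncapped, from E = P * t."""
    return (1 - energy_saving) / (1 + time_increase)


for saving in (0.12, 0.15):
    ratio = implied_power_ratio(saving, 0.03)
    print(f"{saving:.0%} energy saving -> average power at {ratio:.1%} of uncapped")
```

On those assumptions, the capped jobs draw somewhere around 83 to 85 percent of the uncapped average power.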

LLSC is also looking at ways to improve how efficiently AI models are trained and used.

When training models, AI developers often focus on improving accuracy, and they build upon previous models as a starting point. To achieve the desired output, they have to figure out what parameters to use, and getting it right can take testing thousands of configurations. This process, called hyperparameter optimization, is one area LLSC researchers have found ripe for cutting down energy waste.

“We’ve developed a model that basically looks at the rate at which a given configuration is learning,” [LLSC senior staff member Vijay] Gadepally says. Given that rate, their model predicts the likely performance. Underperforming models are stopped early. “We can give you a very accurate estimate early on that the best model will be in this top 10 of 100 models running,” he says.

Jettisoning models that are slow learners has resulted in a whopping “80 percent reduction in energy used for model training.”
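
The general idea of stopping weak configurations early can be sketched in a few lines. This is not LLSC's model: it simply ranks configurations by their accuracy after a short probe run instead of predicting performance from the learning rate. The learning curves are synthetic, training epochs stand in for energy, and every name and number is an assumption chosen for illustration.

```python
import math
import random


def make_configs(n, seed=0):
    """Synthetic configurations: (accuracy ceiling, learning speed)."""
    rng = random.Random(seed)
    return [(rng.uniform(0.6, 0.95), rng.uniform(0.02, 0.2)) for _ in range(n)]


def accuracy(config, epoch):
    """Toy saturating learning curve for one configuration."""
    ceiling, rate = config
    return ceiling * (1 - math.exp(-rate * epoch))


def early_stop_search(configs, probe_epochs, full_epochs, keep):
    """Train everything briefly, keep the top `keep`, finish only those.

    Returns the index of the best surviving configuration and the fraction
    of training epochs saved versus training every configuration fully.
    """
    ranked = sorted(range(len(configs)),
                    key=lambda i: accuracy(configs[i], probe_epochs),
                    reverse=True)
    survivors = ranked[:keep]
    best = max(survivors, key=lambda i: accuracy(configs[i], full_epochs))
    epochs_used = len(configs) * probe_epochs + keep * (full_epochs - probe_epochs)
    epochs_exhaustive = len(configs) * full_epochs
    return best, 1 - epochs_used / epochs_exhaustive


if __name__ == "__main__":
    configs = make_configs(100)
    best, saved = early_stop_search(configs, probe_epochs=20, full_epochs=100, keep=10)
    print(f"Best surviving config: #{best}, epochs saved: {saved:.0%}")
```

The saving in this toy follows directly from the probe length and the number of survivors chosen, so it says nothing about the real-world figure. Ranking on early accuracy can also discard a slow starter that would have finished best, which is why a good performance predictor matters.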

Whatever your feelings about AI, it’s comforting to know that there are plenty of folks out there trying to ensure that AI’s power consumption will be held in check.

Image source: Emerj Insights

Critical Link announces Best in Show awards for two of the company’s latest SOMs.

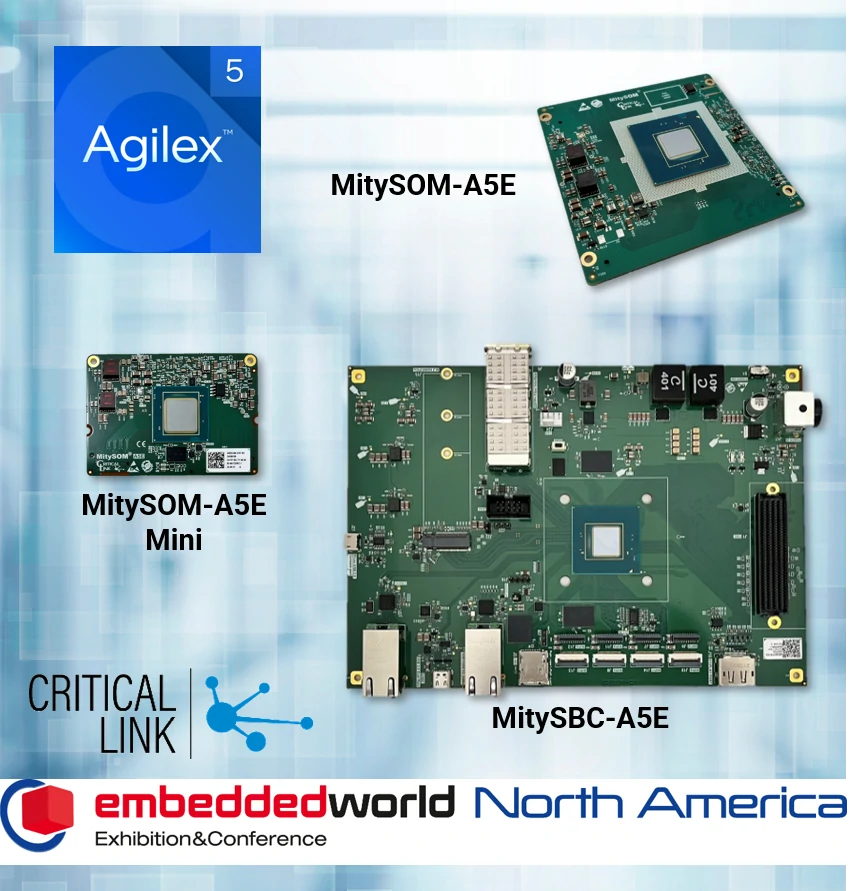

Critical Link announces Best in Show awards for two of the company’s latest SOMs.  Next up is Critical Link’s Agilex 5 FPGA family of products, with three powerful offerings: a full-featured system on module, a smaller SOM to address size or budget constrained applications, and a single board computer for ultimate flexibility and speed. The MitySOM-A5E family offers high performance, lower power, and small form factors at the edge.

Next up is Critical Link’s Agilex 5 FPGA family of products, with three powerful offerings: a full-featured system on module, a smaller SOM to address size or budget constrained applications, and a single board computer for ultimate flexibility and speed. The MitySOM-A5E family offers high performance, lower power, and small form factors at the edge. Visit www.criticallink.com to explore our available products and engineering services. Reach out to us directly anytime at info@criticallink.com to discuss how we can support your next project.

Visit www.criticallink.com to explore our available products and engineering services. Reach out to us directly anytime at info@criticallink.com to discuss how we can support your next project.

Add Critical Link booth #3065 to your Embedded World must-see list and check out a live demonstration of AI at the edge using our latest family of Qualcomm-based system on modules!

Add Critical Link booth #3065 to your Embedded World must-see list and check out a live demonstration of AI at the edge using our latest family of Qualcomm-based system on modules!

We’re excited to get to St Louis this week for another

We’re excited to get to St Louis this week for another

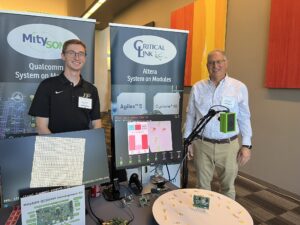

Thank you to the

Thank you to the

Join Omar Rahim and Critical Link in Michigan on September 18! Learn about leveraging Asymmetric Multiprocessing and

Join Omar Rahim and Critical Link in Michigan on September 18! Learn about leveraging Asymmetric Multiprocessing and